“Are we justified in assuming a model of nature for which no calculable simulation is possible?”

—Zuse

Ever since I read Licklider’s 1960 masterpiece, I’ve been obsessed with his vision, the same vision that made me curious about AI. He dreamt of a future where humans and computers would be “coupled together very tightly,” a partnership that would “think as no human brain has ever thought.” More than sixty years on, I feel like we’re finally seeing the tendrils of that symbiotic future unraveling, especially in the most abstract of human endeavors: mathematics. The very culture of mathematical formalism, the way we discover, communicate, and even feel mathematical truth, is undergoing a tectonic shift, and it’s all thanks to the tools we’re building.

I’ve recently discovered—through Tao’s—blog a paper by Heather Macbeth that really struck a chord with me, articulating so much of what I’ve been mulling over. She dives deep into the stylistic and philosophical ravines that separate traditional prose mathematics from its increasingly common computer-formalized counterpart. Her central argument, and one I find profoundly compelling, is that abstraction itself plays a fundamentally different role when we bring machines into the proving process. In the old world of pen and paper, there’s a natural, almost instinctual, hesitation to generalize too aggressively. The intellectual courage is complemented by the pragmatic acknowledgment of the cognitive overload it places on the reader, and the sheer, painstaking labor required to ensure that a sweeping abstraction doesn’t conceal a fatal flaw or, just as bad, render the core intuition opaque.

But, as Macbeth points out, these barriers, these hesitations, largely dissolve in a formal setting. When I’m navigating a formalized mathematical argument, the game changes. As a reader I can instantly inspect definitions, trace the lineage of a lemma, or click-to-verify that a claim indeed holds in the precise abstract context it claims to. The machine handles the meticulous, often mind-numbing, bookkeeping. And as a programmer of mathematics—a role Licklider would have relished—I find myself liberated. I can soar above the drudgery of symbol-shuffling and rote calculation, focusing my energies on the grand architecture of an argument, on erecting the conceptual scaffolding. I trust the machine to manage the low-level joinery with an accuracy that far surpasses my own fallible human memory and attention. This brings to mind Perlis’s wonderfully sharp observation:

“You think you know when you learn, are more sure when you can write, even more when you can teach, but certain when you can program.”

When we can articulate a mathematical idea with such clarity that a machine can parse, internalize, and verify it, our own understanding crystallizes, achieving a new, almost unnerving, level of certainty.

This man-computer symbiosis is precisely what Licklider was getting at:

“men will set the goals, formulate the hypotheses, determine the criteria, and perform the evaluations. Computing machines will do the routinizable work that must be done to prepare the way for insights and decisions in technical and scientific thinking.”

And the beauty of it, as Macbeth suggests and as my own experiences confirm, is that this partnership is leading to a richer, more textured form of mathematical storytelling. We can now weave with ease in diverse forms of reasoning—intricate symbolic derivations, exhaustive computational case analyses—without shattering the narrative flow or eroding the trust between author, reader, and the increasingly indispensable machine. I wrote this last year, after a long day of coding:

April 14, 2024

It makes me genuinely happy to see mathematicians of Terence Tao’s caliber vibe coding Python libraries to dispatch the kind of tedious calculations that used to consume days or weeks of his very own life. Aided by LLMs for the initial boilerplate, he hacks together a proof-of-concept. It’s not elegant, he admits, but “elegance is not the point here; rather, that it is automated.” This, right here, is the Macbeth thesis in action. The style of proof changes because the process of proving has changed.

And Tao doesn’t stop there. His initial tool rapidly evolves into a more general, “flexible proof assistant,” explicitly designed to echo some of the interactive features of mature systems like Lean, and crucially, powered by the symbolic algebra workhorse, SymPy. The focus shifts to a semi-automated, interactive dance: the human provides the high-level tactics—the strategic leaps of intuition—and the machine diligently executes the calculations, verifies the steps, and presents the evolving state of the proof. This iterative dialogue, this ready-to-hand between human insight and machine rigor, is where the magic of Licklider’s symbiosis truly comes alive.

This theme of self-improving systems and the automated generation of reasoning took an even more bold turn in another recent paper I encountered. I’ve been waiting for a pure, AlphaZero-style approach to reasoning LLMs, and this paper, in many ways, delivers. The core idea is: eliminate reliance on human-curated data entirely. Forget supervised fine-tuning on mountains of existing proofs or question-answer pairs. Here, a single LLM plays both “proposer” (generating its own mathematical tasks) and “solver.” It dynamically crafts, validates, and learns from its own bespoke curriculum, all within a self-play loop, with verifiable rewards coming from the cold, hard truth of code execution. It’s like teaching a child chess by having them invent their own puzzles and then rewarding them for solving them correctly—a daunting, but potentially incredibly rewarding, pedagogical strategy (it kinda did its job in my case). The system operationalizes fundamental reasoning modes—deduction, abduction, induction—all grounded in the concrete reality of (program, input, output) triplets, using Python as the arbiter of truth. And, fascinatingly, the authors report the emergence of intermediate planning in the LLM’s outputs, a kind of ReAct-style internal monologue appearing as comments and step-by-step logic within the generated code. It’s almost as if the machine is learning to show its work—”The aim is to outsmart all these groups of intelligent machines and less intelligent humans. This is for the brains behind the future.” — not for a human grader, but for itself, as a scaffold for more complex reasoning.

This trajectory, from Licklider’s speculative vision to Tao’s pragmatic tool-building and the cold self-play of “Absolute Zero,” points toward a future where the nature of mathematical work is deeply altered. It’s a future that also brings to mind the pioneering, though perhaps less globally recognized, work of Konrad Zuse. His 1969 treatise, though penned decades before the current AI boom, wrestled with astonishingly prescient questions. Zuse, an engineer by training, was driven by the practical need to automate calculations, but his thinking quickly transcended mere engineering. He posited a universe that was, at its core, computational—a calculating space where physical laws might be explicable in terms of discrete, automata-like operations.

Zuse grappled with the tension between continuous physical theories and the discrete nature of computation. He explored how differential equations could be viewed from an automaton theory perspective, and how even concepts like Maxwell’s equations or gravitation might be reinterpreted in a digital, algorithmic framework. He envisioned “digital particles” and “cellular automatons” as fundamental building blocks, questioning whether nature itself might be “digital, analog or hybrid.” He wrote:

“The question therefore appears justified whether data processing can have no more than an effectuating part in the interplay or whether it can also be the source of fruitful ideas which themselves influence the physical theories.”

Zuse even touches on the philosophical implications for causality and information theory, wondering if “the assumption of valid determination only in the positive time direction is not influenced in the least by the dissolution of physical laws into the laws of probability.” His musings on the “information content” of the cosmos and the limits of simulating an infinitely fine reality with finite computational resources feel startlingly contemporary—just look at Wolfram’s writings and, to some extent, mine. Zuse was asking, in essence, if the universe itself is a kind of computer, and if so, what are the rules of its program? While his specific models of digital particles might seem primitive today, his foundational inquiry—”Is nature digital?“—and his attempts to reconcile physics with computational principles laid some of the earliest groundwork for what is now known as digital physics. The very notion that “objects and elementary dimensions of physics must not be complemented by the concept of information, but rather should be explained by it,” as the afterword to the re-edition of his work states, is to me a profound idea we shouldn’t abandon so lightly.

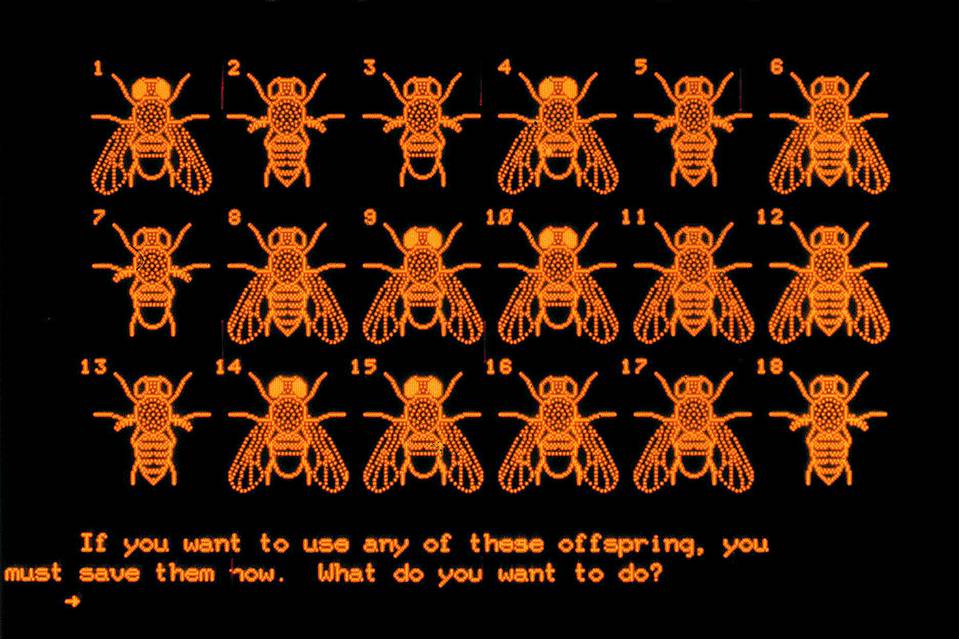

Today, we are building the tools that Zuse could only dream of, tools that allow us to go beyond mere calculation and reason with and about the formal structures of mathematics in entirely new ways. The “software,” to use Zuse’s anachronistic but apt term, is finally beginning to catch up with the “hardware” of our mathematical intuitions. Sadly, most students still do math in much the same way early computer scientists wrote assembly code. If I had learned SymPy in high school, I would have been a god by now. Fortunately, the current generation has Claude, so its offspring might very well become the gods of tomorrow.